An Intelligence Artificer

A field manual for technologists working with AI as a medium

I called myself an “Intelligence Artificer” on LinkedIn as a joke, mostly for my own amusement, which is usually reason enough. But the more I thought about the framing, the more it stuck.

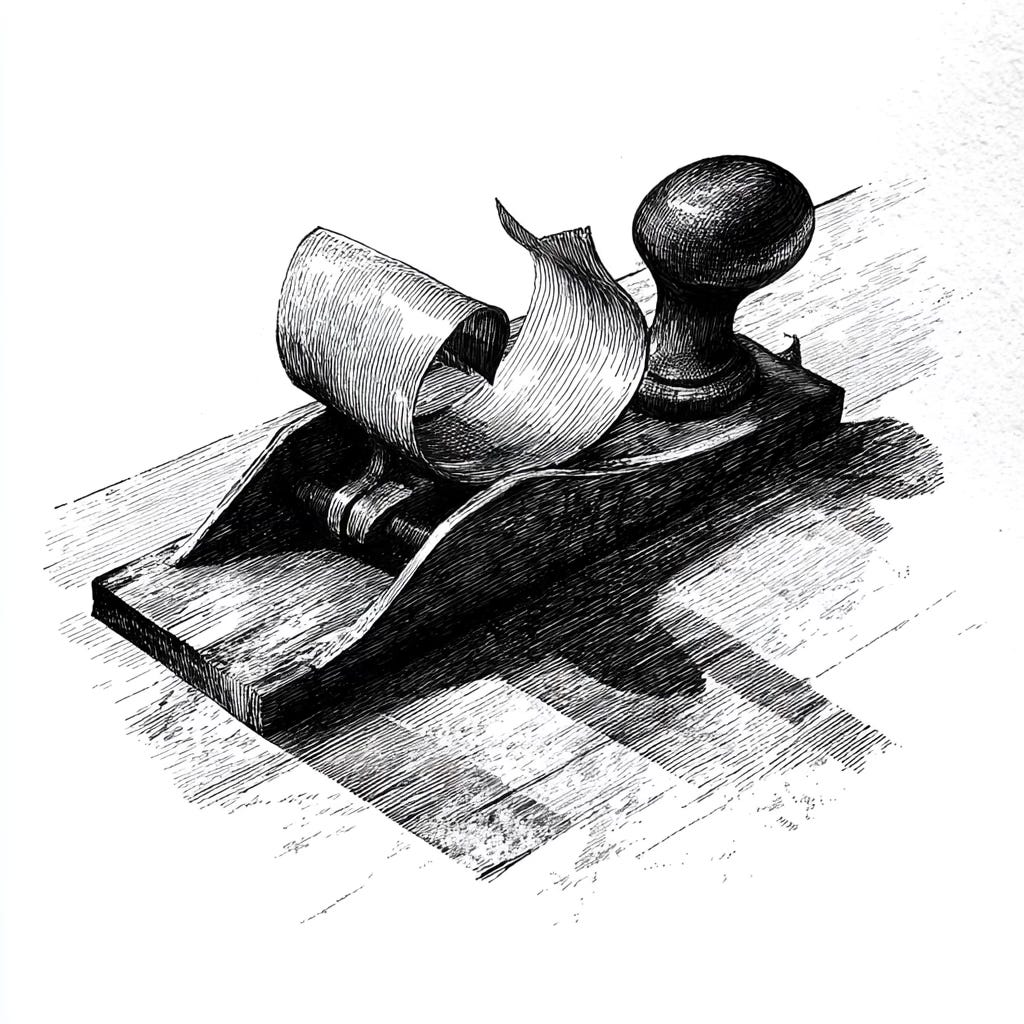

The word artificer is old and dusty. Anachronistic enough that it’s now a class in Dungeons & Dragons. It shares roots with artificial, from the Latin:

ars (art) + facere (to make).

An artificer is a working builder. Someone who shapes material into form.

And lately that material is machine intelligence. Models, embeddings, context windows. Attention.

AI is not automation. It doesn’t “do what it’s told.” It reacts and suggests. It can’t be commanded like a script. It has to be worked. Shaped, prompted, corrected, and sometimes yelled at — if the mood strikes.

This post began as a manifesto, but I now think of it as a field manual. It’s neither a thinkpiece nor a roadmap. It’s for practitioners: people integrating AI into systems, workflows, and tools. Not as a hype additive, but as a material with real properties, real constraints, and real utility.

Why this framing?

There are enough diagrams and LinkedIn thinkfluencers out there trying to rebrand prompt engineering every six months. I’m not interested in that.

The framing is simple: AI is a material. Like timber or electricity or ink. It has affordances. If you understand it, you can build with it.

This means combining classical software practice — systems thinking, modularity, observability — with some newer disciplines: prompt engineering, context shaping, orchestration, evaluation.

You don’t need a PhD, but you do need to understand:

the shape of the API you’re calling

the difference between user inputs and system prompts

how tokenization and context windows behave

when to reach for a model, and when not to

The rest is craft.
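To make the context-window item concrete, here is a minimal sketch of trimming a conversation to fit a token budget. Everything in it is an assumption for illustration: `fit_to_window` and `rough_token_count` are hypothetical names, and the four-characters-per-token estimate is a crude stand-in. Pass your model’s real tokenizer as `count` if you need accurate numbers.

```python
from typing import Callable

Message = dict[str, str]


def rough_token_count(text: str) -> int:
    """Crude stand-in (assumption): roughly one token per four characters."""
    return max(1, len(text) // 4)


def fit_to_window(
    messages: list[Message],
    budget: int,
    count: Callable[[str], int] = rough_token_count,
) -> list[Message]:
    """Keep every system message, then the most recent turns that fit."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(count(m["content"]) for m in system)

    kept: list[Message] = []
    for m in reversed(turns):  # walk from newest to oldest
        cost = count(m["content"])
        if used + cost > budget:
            break  # everything older than this turn is dropped
        kept.append(m)
        used += cost
    return system + list(reversed(kept))  # restore chronological order


history = [
    {"role": "system", "content": "Be brief."},
    {"role": "user", "content": "x" * 40},
    {"role": "assistant", "content": "y" * 40},
    {"role": "user", "content": "z" * 40},
]
trimmed = fit_to_window(history, budget=25)
```

With this budget the oldest user turn falls out of the window while the system message survives. That is the behavior worth internalizing: the model only ever sees what you chose to send.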

Field Practices

I’ve been refining these for my own work. These aren’t commandments; just patterns that have helped me build more reliably and avoid the worst surprises.

Curate, don’t abdicate

AI doesn’t make decisions. You do. Your job is to frame the problem and shape the output. Give it guardrails, provide examples, set expectations. Narrow the scope. Even when using an agent framework, design the moments where control hands back to a human.

🙂↕️ Do: Use a dropdown or buttons to frame user intent and structure input before passing it to the model. Tell the agent in its system prompt which inputs are valid.

🙂↔️ Don’t: Let the user type freeform input and pray the LLM interprets it correctly.
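A minimal sketch of the “Do” above, assuming a UI that offers three fixed choices. The `Intent` values and the `build_messages` helper are hypothetical, and the message format follows the common chat-style `role`/`content` convention rather than any one provider’s API.

```python
from enum import Enum


class Intent(Enum):
    """Hypothetical options a dropdown might offer."""
    SUMMARIZE = "summarize"
    TRANSLATE = "translate"
    EXTRACT_DATES = "extract_dates"


def build_messages(intent_value: str, text: str) -> list[dict]:
    """Validate the selected intent, then assemble chat-style messages."""
    intent = Intent(intent_value)  # raises ValueError for anything off-menu
    valid = ", ".join(i.value for i in Intent)
    system = (
        "You perform exactly one task per request. "
        f"Valid tasks: {valid}. "
        "If the task is not in that list, reply with the single word REFUSED."
    )
    user = f"task: {intent.value}\n---\n{text}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


messages = build_messages("summarize", "Quarterly numbers were flat.")
```

The validation happens twice on purpose: once in code, before the model is ever called, and once in the system prompt, so the model knows what the menu is.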

Build from first principles

You don’t need to know how backpropagation works. But you should know:

how latency will impact responsiveness

how your model handles memory and state

whether you’re running a chat or completion model

what’s happening between input and output (tokenization, windowing, streaming)

If you’ve never read the full request/response body from your AI provider, start there.

💁♂️ Pro tip: Send your OpenAI or Anthropic request with curl from the terminal and actually read what comes back. Unfamiliar with curl? Ask Claude Code to write the command for you.
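If you would rather stay in Python, the same exercise can be sketched with the standard library. This assumes an OpenAI-style `/chat/completions` endpoint, which local servers such as LM Studio and Ollama also expose. The base URL, model name, and helper names below are placeholders, so check your provider’s API reference for the exact shape.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       system: str, user: str) -> urllib.request.Request:
    """Assemble (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def show_request(req: urllib.request.Request) -> str:
    """Return the request line and pretty-printed body, for reading."""
    body = json.dumps(json.loads(req.data), indent=2)
    return f"{req.get_method()} {req.full_url}\n{body}"


def send_and_show(req: urllib.request.Request) -> str:
    """Send the request and return the full response JSON, pretty-printed."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.dumps(json.load(resp), indent=2)


req = build_chat_request(
    base_url="http://localhost:1234/v1",  # assumed local server address
    api_key="not-needed-locally",         # placeholder
    model="your-model-name",              # placeholder
    system="You are a terse assistant.",
    user="Say hello in five words.",
)
print(show_request(req))
# print(send_and_show(req))  # uncomment once a server is listening
```

Read both halves. The request shows exactly what the model receives. The response usually carries more than the text: finish reasons, token usage, and model identifiers are worth knowing about.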

Own your toolchain

You can use GPT, Claude, Gemini, DeepSeek, whatever — but know how it’s integrated. Know how to switch models without rewriting your app. Know how to downgrade gracefully when latency spikes or tokens run out.

🙂↕️ Do: Abstract your model provider behind a common function or class early.
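One way this abstraction might look, as a sketch rather than a prescription. `Provider` and `ModelRouter` are hypothetical names, and the two providers are stubs standing in for real SDK or HTTP calls.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# A provider is any callable that maps (system, user) to text.
Complete = Callable[[str, str], str]


@dataclass
class Provider:
    name: str
    complete: Complete


class ModelRouter:
    """Try providers in order and fall back when one fails."""

    def __init__(self, providers: Sequence[Provider]):
        if not providers:
            raise ValueError("at least one provider is required")
        self.providers = list(providers)

    def complete(self, system: str, user: str) -> tuple[str, str]:
        """Return (provider_name, text) from the first provider that works."""
        errors = []
        for p in self.providers:
            try:
                return p.name, p.complete(system, user)
            except Exception as exc:  # e.g. timeouts, rate limits, quota errors
                errors.append(f"{p.name}: {exc}")
        raise RuntimeError("all providers failed: " + "; ".join(errors))


# Stubs for illustration only; swap in real client calls.
def flaky_cloud(system: str, user: str) -> str:
    raise TimeoutError("simulated latency spike")


def local_stub(system: str, user: str) -> str:
    return f"[local] {user[:40]}"


router = ModelRouter([Provider("cloud", flaky_cloud), Provider("local", local_stub)])
print(router.complete("Be brief.", "Summarize this paragraph."))
```

The rest of the app only ever calls `router.complete`. Switching models becomes a change to the provider list, and graceful downgrade is the order of that list.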

I use Claude for its coding prowess and summarization. I use GPT for loose prose. But I often start with local models using LM Studio or Ollama. A small embedded Mistral model will often do the job faster and cheaper, and running it helps you understand how the models actually differ. You won’t know until you run them side by side.

Bias toward locality

There are good reasons to use the cloud. The frontier models are impressive and their APIs are battle-tested. But they come with cost, latency, and opacity. Local models offer speed, privacy, and cost savings. They let you run at the edge, and they force you to trim fat.

You’ll think more clearly when you work with smaller models first.

🥞 My stack: I prototype with LM Studio or Goose + Ollama. I use ChatGPT for general-purpose discussion and project organization, and Claude Desktop when I want to discuss something more technical or produce small artifacts. I use Warp as a super-powered terminal and Zed for day-to-day development (not Cursor, shocking).

Prefer small, sharp tools

Don’t try to build “an AI agent that helps me do everything.” Start with a single, composable task. Adhere to the Unix philosophy.

Write one script that:

takes an audio file

transcribes it with whisper.cpp

runs a summarization model

outputs a markdown log

Test that. Then improve it. Maybe it becomes a workflow, or maybe not. Either way, it’s fine.

🙂↕️ Do: Chain small programs. Pass structured output. Iterate one hop at a time.

🙂↔️ Don’t: Build a tree of nested tool calls before anything works, or create a monstrous single-shot prompt that exhausts your context window before you even get to the real task at hand.
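The audio-to-markdown script above can be sketched as a chain of small functions that pass structured data. The transcription and summarization steps here are hypothetical stubs: in a real script, `transcribe` would wrap whisper.cpp and `summarize` would call a model. The stubs exist so the plumbing can be tested first.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Transcript:
    source: str
    text: str


@dataclass
class Summary:
    source: str
    bullets: list[str]


def to_markdown(summary: Summary) -> str:
    """Render a summary as a small markdown log entry."""
    lines = [f"## {summary.source}", ""]
    lines += [f"- {b}" for b in summary.bullets]
    return "\n".join(lines) + "\n"


def run_pipeline(
    audio_path: str,
    transcribe: Callable[[str], Transcript],
    summarize: Callable[[Transcript], Summary],
) -> str:
    """One hop at a time: audio -> transcript -> summary -> markdown."""
    transcript = transcribe(audio_path)
    summary = summarize(transcript)
    return to_markdown(summary)


# Hypothetical stand-ins so the chain runs before any model is wired in.
def fake_transcribe(path: str) -> Transcript:
    return Transcript(source=path, text="We shipped the parser. Evals are next.")


def fake_summarize(t: Transcript) -> Summary:
    sentences = [s.strip() for s in t.text.split(".") if s.strip()]
    return Summary(source=t.source, bullets=sentences[:3])


print(run_pipeline("standup.wav", fake_transcribe, fake_summarize))
```

Because each hop takes and returns a plain data structure, any step can be replaced, logged, or tested on its own without touching the others.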

Observe your systems

If you’re not logging inputs and outputs, you’re just guessing. Use evals, not vibes. Track behavior. Tag edge cases.

Use eval frameworks or just write your own diff tools. Keep a copy of what the AI saw and said. Debug like a software engineer, not a fortune teller.

🙂↕️ Do: Store prompt + response + system state. Build a local dashboard.

🙂↔️ Don’t: Rely on vibes and screenshots to debug production behavior.
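A minimal sketch of what “store prompt + response + system state” could mean in practice: append one JSON record per call to a local file, and diff responses when behavior changes. The function names and record fields are assumptions for illustration, not a standard schema.

```python
import difflib
import json
import time
from pathlib import Path


def log_interaction(log_path: Path, *, model: str, system: str,
                    prompt: str, response: str, state: dict) -> dict:
    """Append one record of what the model saw and said to a JSONL file."""
    record = {
        "ts": time.time(),
        "model": model,
        "system": system,
        "prompt": prompt,
        "response": response,
        "state": state,  # e.g. app version, prompt version, feature flags
    }
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return record


def load_log(log_path: Path) -> list[dict]:
    """Read every record back, skipping blank lines."""
    with log_path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def diff_responses(old: str, new: str) -> str:
    """Unified diff of two responses, for spotting behavior drift."""
    return "\n".join(difflib.unified_diff(
        old.splitlines(), new.splitlines(),
        fromfile="before", tofile="after", lineterm="",
    ))
```

A JSONL file is enough to start. It greps well, loads into a notebook or a local dashboard later, and gives you something concrete to diff when a prompt change alters behavior.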

Aesthetic matters

Even prompt design is a craft. Tone matters. Word choice matters. Whether you ask in bullet points or full sentences matters. The structure of your interface affects the shape of the output.

And your logs matter, too. Craft is visible.

The difference between automation and augmentation

This is the line I see a lot of people miss. They confuse “AI” with “more automation.”

⚙️ Automation is: do the same thing faster, cheaper, more consistently.

🕰️ Augmentation is: add responsiveness, flexibility, and context, a human-like layer of dynamism.

Most of my systems are built around automation. But within those workflows are augmented steps or subroutines.

That’s the leverage point. Dynamism is the superpower of AI. It gives you flexibility without hardcoding. It lets you build systems that behave differently depending on the data, the context, or the user’s intent. You can change behavior without redeploying. You can prototype new UX by changing a prompt. That’s what makes it interesting and why I am still excited.

But that’s also why the work matters. These are not simple tools — they have edges. They need shaping. They require judgement. They call for a builder’s hand.

They call for an artificer.
