Prompt Adherence, or Your Tokens Back

Was your AI's output useless or greedy? You may be entitled to financial compensation.

Sometimes, I’ve said swear words. I’m not proud of it, but I said a cuss at my AI assistant. Multiple times. Sometimes out loud.

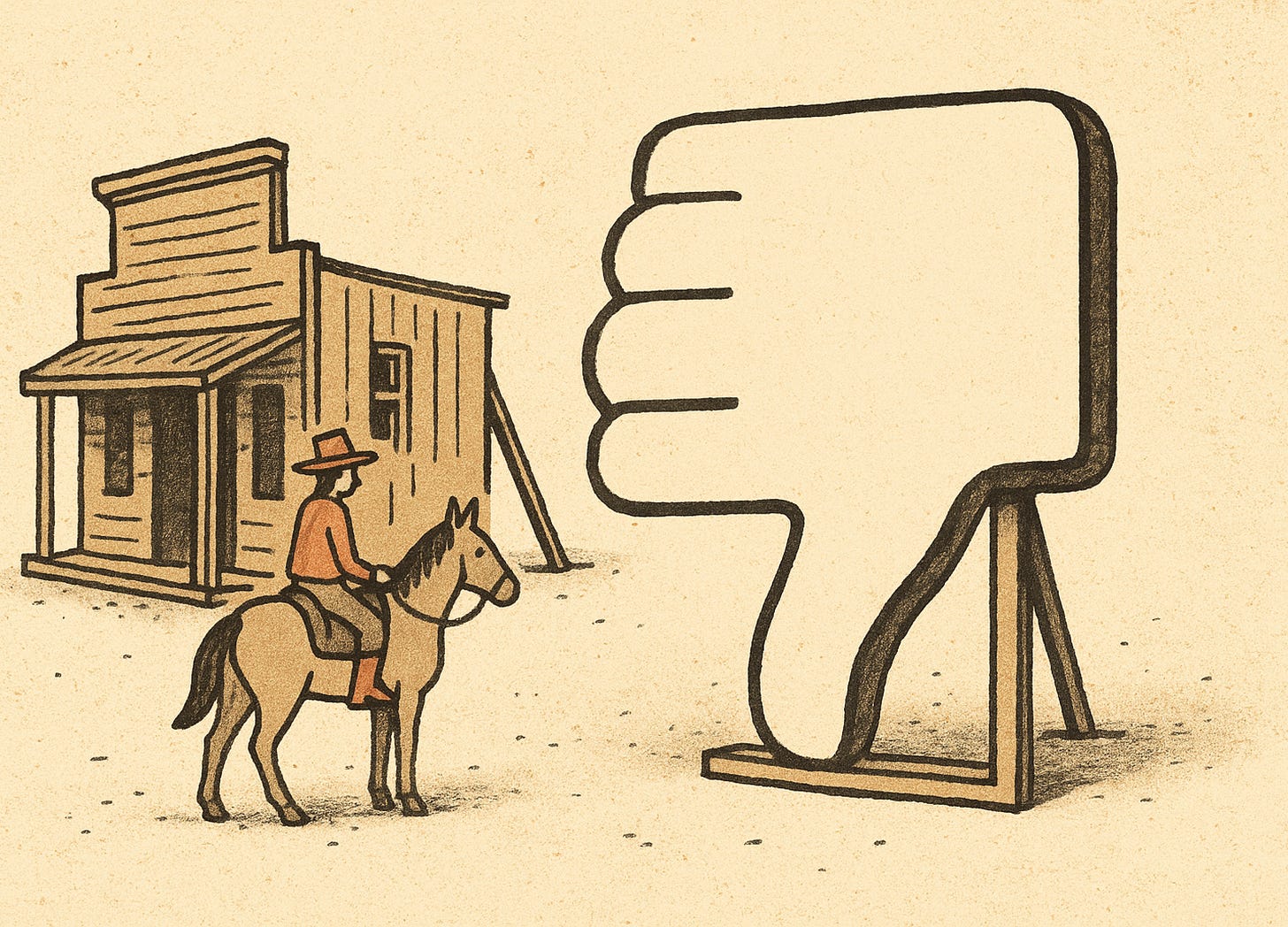

When an agent keeps drifting from the task — rewriting code I didn’t ask to be touched, ignoring constraints I carefully spelled out — the frustration builds fast. The only sanctioned outlet is a tiny little thumbs-up or thumbs-down icon.

What happens after I click the button? I have no clue. Does it adjust the model for me personally? Is it registered anywhere? Am I yelling into a void? Whatever reinforcement loop exists is opaque, and certainly not tuned to my needs in the moment.

What do humans do?

If I were working with another engineer, there would be a process for this. Code reviews, pairing sessions, mentorship programs. Even the Byzantine systems — ticket approvals, QA gates, sign-off chains — exist to hold people accountable and ensure the work meets the specification.

With AI agents, there’s no process. No mentoring, no escalation path. Just start over or give up.

The vibes are off

Fred Benenson, in his excellent post “The perverse incentives of Vibe Coding,” writes about the way AI-assisted coding can trap you in a “just one more prompt” loop. That feeling that you’re always one nudge away from the perfect solution. It’s addictive, but it’s also wasteful.

He notes that these systems often produce bloated, over-engineered solutions where a human might write something lean and elegant. In one case, his own hand-coded algorithm was far shorter (and actually functional) compared to an AI version that was hundreds of lines longer and still broken.

Here’s the core misalignment: users want a working, correct solution that addresses their problem as directly as possible, and LLM providers make more money when more tokens are generated.

More tokens processed = more revenue for the company behind the AI

When verbosity is profitable, there’s no financial incentive to cut bloat. “Close enough” becomes the norm, and users pay for the gap.

Accountability at inference time

In AI research, “alignment” usually means shaping a model’s behavior during training so it’s safer, more truthful, or more helpful. Fine-tuning strategies, reinforcement learning setups, and safety guardrails are part of the art and science of model development. It’s very important… and it happens in the lab, long before the model ever touches a real user’s workflow.

Alignment at training time doesn’t guarantee alignment when it counts: during inference. That’s the moment the model is running, I’m paying for every token, and I’m depending on it to do the thing I actually asked it to do.

In that moment, there’s no meaningful accountability. The provider gets paid whether the output nails the prompt or veers wildly off-course. The cost — both the direct cost of wasted tokens and the indirect cost of lost time — lands squarely on me.

This isn’t just an alignment problem, it’s a service problem. Good service means the customer leaves satisfied, confident they got what they paid for, not overcharged and underserved. Service here also means the user gets better over time, which means making the system transparent enough to show what worked, what failed, and how to prompt more effectively next time. A system that fails to guide its user is just as broken as one that fails to follow the prompt.

If we care about making these systems genuinely useful, the alignment conversation has to move beyond training and into runtime, with mechanisms that hold the system financially and operationally responsible for meeting the user’s requirements. Only then do incentives point in the same direction as the user’s success. Only then do these systems start behaving like they’re in the business of serving the customer.

A Runtime Feedback Contract

What I’m imagining is AI systems having a financial stake in prompt adherence. Here are some thoughts about how it could work:

Inline Evaluations. Check outputs automatically for format, content, and correctness before billing. If they fail, regenerate at the provider’s expense.

Post-hoc QA. Let users flag bad outputs. Put that thumbs-down button to work. Automated checks verify and credit wasted tokens.

Token Efficiency SLAs. Commit to a minimum ratio of “useful tokens” to billable tokens, with refunds if the bar isn’t met.

Ledger-Based Billing. Track both total tokens and “approved” tokens. Only bill for the latter.
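
To make the idea less hand-wavy, here is a minimal Python sketch of how inline evaluations and a ledger could fit together. Everything in it is an assumption for illustration: the names `Ledger`, `generate_with_checks`, and `count_tokens` are hypothetical, the "tokenizer" just counts whitespace-separated words, and the check is a toy format test. No provider exposes an API like this today, as far as I know.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical check: takes the model output, returns True if it passes.
Check = Callable[[str], bool]


@dataclass
class Ledger:
    """Tracks every generated token, but bills only the approved ones."""
    total_tokens: int = 0
    approved_tokens: int = 0

    def record(self, tokens: int, approved: bool) -> None:
        self.total_tokens += tokens
        if approved:
            self.approved_tokens += tokens

    def efficiency(self) -> float:
        """Share of generated tokens that passed evaluation."""
        if self.total_tokens == 0:
            return 1.0
        return self.approved_tokens / self.total_tokens

    def amount_due(self, price_per_token: float) -> float:
        """The user pays for approved tokens only."""
        return self.approved_tokens * price_per_token


def count_tokens(text: str) -> int:
    # Stand-in for a real tokenizer (assumption): count words.
    return len(text.split())


def generate_with_checks(
    generate: Callable[[], str],
    checks: List[Check],
    ledger: Ledger,
    max_attempts: int = 3,
) -> Optional[str]:
    """Regenerate until every check passes or attempts run out.

    Failed attempts are recorded in the ledger as unapproved,
    so they count toward total tokens but never toward the bill.
    """
    for _ in range(max_attempts):
        output = generate()
        passed = all(check(output) for check in checks)
        ledger.record(count_tokens(output), approved=passed)
        if passed:
            return output
    return None


# Usage: a fake model that rambles once, then follows the format.
attempts = iter(["here is a long essay about sorting", "[1, 2, 3]"])
ledger = Ledger()
result = generate_with_checks(
    generate=lambda: next(attempts),
    checks=[lambda out: out.startswith("[") and out.endswith("]")],
    ledger=ledger,
)
print(result)               # [1, 2, 3]
print(ledger.total_tokens)  # 10 (7 wasted + 3 approved)
print(ledger.efficiency())  # 0.3
```

The `efficiency()` number is the kind of figure a Token Efficiency SLA could commit to, and `amount_due()` is the ledger-based bill. The hard part, which this sketch skips entirely, is writing checks that both sides trust.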

This wouldn’t just be about fairness, or reducing my swear words per thread. It would shift the incentives. Providers would be rewarded for delivering correct, concise results and penalized for deviation.

Why it matters

Accountability at inference time would do more than just make providers eat the cost of wasted tokens. It would align incentives so everyone’s pulling in the same direction: the provider profits only when the user succeeds. It would build trust through transparent accounting for accuracy and efficiency, and it would raise the bar by turning prompt adherence into a measurable, financially backed guarantee.

And just as importantly, it would train the human on the other side of the screen. A well-designed feedback loop doesn’t just catch errors, it teaches you what a “good” prompt looks like and how evaluations work, until those practices become second nature.

Until then, the thumbs-up / thumbs-down buttons will keep feeling like placebo switches. A polite ritual for feedback that changes nothing in the moment.

The cussing will continue until an artificial approximation of morale improves.